Artificial intelligence (AI) is the ability of machines to reproduce human or animal capacities such as problem-solving. One of AI’s subdomains is Machine Learning (ML), whose goal is to make computers learn business rules from data alone, without being given the business knowledge explicitly. Among ML methods, Deep Learning (DL) is based on learning data representations. The philosophy behind this concept is to mimic the brain’s processing pattern in order to define the relationship between stimuli. This has led to algorithms organised in layers, which are particularly efficient in the computer vision field. In some cases, these algorithms are able to surpass human abilities.

To reach such performance, two kinds of neural networks are used:

Convolutional neural networks (CNN): These networks are dedicated to object recognition. They are made of convolutional layers (filters) and pooling layers.
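To make the two building blocks more concrete, here is a minimal sketch in plain Python, without any DL framework. The function names `conv2d` and `max_pool2d`, the toy image and the filter values are all illustrative assumptions; real frameworks implement these layers far more efficiently and learn the filter values from data.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value of each non-overlapping size x size window."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-written filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

features = conv2d(image, kernel)  # 4x4 map, non-zero only at the edge
pooled = max_pool2d(features)     # 2x2 summary of the feature map
print(pooled)                     # [[2, 0], [2, 0]]
```

The convolution detects where the edge is, and the pooling step shrinks the feature map while keeping the strongest response of each region.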

Recurrent neural networks (RNN): These networks add a temporal component to object recognition and thus enable activity detection. This is possible because information is shared between images ordered over time.
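The idea of sharing information over time can be sketched with a single recurrent unit in plain Python. This is a simplified illustration, not a full RNN: the inputs are scalars instead of images, and the weights `w_x`, `w_h` and `b` are arbitrary values chosen for the example rather than learned ones.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence in order, carrying the hidden state along."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Both sequences end with the same input (0.0), but the first one
# saw a non-zero input earlier, so its final state is different.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a[-1] != states_b[-1])  # True
```

The hidden state `h` is what lets the network remember earlier frames when it processes the current one.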

Because they aim to mimic human capacities, machine learning algorithms cannot be written in general-purpose programming languages alone without a great deal of complexity. This is why, over the last 10 years, several tech companies have released frameworks to help popularize ML. As a result, ML use has extended to the developer community at large.

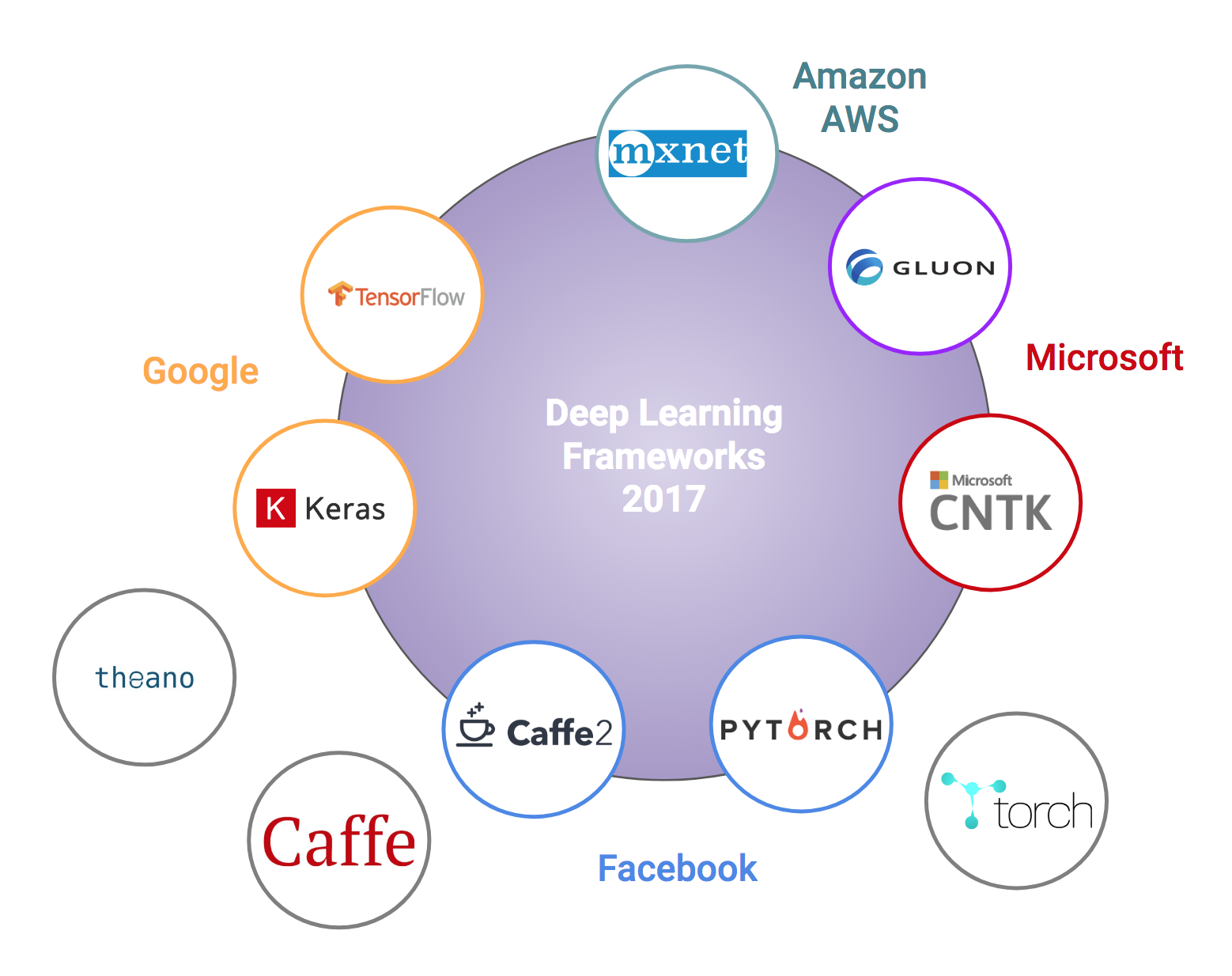

Among these frameworks, those backed by big tech companies such as Google and Facebook are by far the market leaders (fig. 1).

Fig1. State of open source deep learning frameworks


Working on emerging technologies is never easy. This is why, when choosing a framework, community knowledge is a plus. With this in mind, we looked at the most popular frameworks in the DL field, and Tensorflow [5], Caffe [6] and Pytorch seemed to lead the market. Note that we do not consider Theano, even though it is backed by pioneers in ML, because its maintainers announced the end of development in 2018.

We then compared Tensorflow, Caffe and Pytorch. Let’s first summarize each framework’s history and capabilities before highlighting the choice we made for this project.

Tensorflow:

This framework is backed by Google. It is largely based on Theano and is considered its successor, as some of Theano’s creators are taking part in the project. It was developed by the Google Brain team, initially for internal use. In November 2015, Tensorflow was released under the Apache 2.0 open source license.

This DL framework is based on a C++ back-end which can run on CPU, Nvidia CUDA GPU, Android, iOS and OSX. It uses a static graph, meaning that the graph is built once and executed many times. Tensorflow is a very low-level library, which allows fine tuning but also adds some complexity [3]. It can be used through several APIs, such as:

- Python
- C++
- R
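The "built once, executed many times" idea can be sketched in plain Python. This is a toy model of a static graph, not Tensorflow’s API: the `Placeholder` and `Op` classes and the `run` function are names invented for this illustration.

```python
class Placeholder:
    """A graph input whose value is only supplied at execution time."""

    def __init__(self, name):
        self.name = name


class Op:
    """A graph node that applies a function to the outputs of other nodes."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs


def run(node, feed):
    """Evaluate a node by evaluating only the nodes it depends on."""
    if isinstance(node, Placeholder):
        return feed[node.name]
    args = [run(inp, feed) for inp in node.inputs]
    return node.fn(*args)


# Build phase: describe the computation, nothing is computed yet.
x = Placeholder("x")
w = Placeholder("w")
product = Op(lambda a, b: a * b, x, w)
output = Op(lambda a: a + 1, product)

# Execution phase: the same graph is reused with different data.
first = run(output, {"x": 2, "w": 3})    # 7
second = run(output, {"x": 5, "w": 4})   # 21

# Any intermediate node can be evaluated on its own.
partial = run(product, {"x": 5, "w": 4})  # 20
```

Separating the description of the computation from its execution is what lets a framework optimise the graph before running it, at the cost of a less direct programming style.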

Judging by its Github stars [5], the development community behind Tensorflow is huge. Moreover, because it is backed by Google, the Tensorflow community is able to release quick and frequent updates.

Here are some strengths of Tensorflow:

- Tensorboard for visualization
- Data and model parallelism
- Deploy on cellular devices as well as complex setups
- Pipelining ability
- High-level API: TF-Learn, Keras, TF-Slim
- Can easily handle video, images, text, audio

Aside from this, Tensorflow also lets the developer execute subparts of learning graphs which is useful for debugging.

As nothing is perfect, Tensorflow also has some weaknesses:

- No Windows support
- GPU support only on Nvidia

In order to attract people not interested in fine-tuning, Tensorflow released several high-level APIs:

Keras: This is a high-level API written in Python. It focuses on enabling fast experimentation by adding an abstraction level to Tensorflow. Keras’s goal is to reduce the user’s cognitive load while developing DL features. The focus is on user-friendliness and modularity (neural layers, optimizers, etc. are standalone modules) [13].

TF-Learn: TFlearn is a modular and transparent Deep Learning library built on top of Tensorflow. It was designed to provide a higher-level API to TensorFlow in order to facilitate and speed up experimentation while remaining fully transparent and compatible with it [14].

In conclusion, Tensorflow is a great library which can be used for numerical and graph-based computation on data when creating deep learning networks. It is the most widely used library for various applications. The uniqueness of TensorFlow also lies in dataflow graphs: structures that consist of nodes (mathematical operations) and edges (numerical arrays or tensors). It can be easily installed. Its applications go beyond deep learning to support other forms of machine learning such as reinforcement learning. The community supporting Tensorflow is the biggest for the moment and keeps on growing. Finally, TensorFlow is a low-level library that requires ample code writing and a good understanding of data science specifics to start working with it successfully.

Caffe:

This project started at the University of California, Berkeley and is now under Facebook’s guidance. Caffe is released under the BSD 2-Clause license. It is based on a C++ and CUDA backend. Caffe is supported on many platforms, such as OSX, Ubuntu, CentOS, Windows, iOS, Android and Raspbian. Caffe2 comes with C++ and Python APIs. Caffe is a high-level library that relies on loading model configurations from prototxt (protobuf text) files.
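The configuration-driven approach can be illustrated with a toy analogue in plain Python. This is neither the prototxt format nor Caffe’s API: the `LAYER_TYPES` registry, the `build_model` function and the layer names are hypothetical and only show what it means to describe a model as data rather than as code.

```python
# Hypothetical registry mapping a layer type to a factory that builds it.
LAYER_TYPES = {
    "scale": lambda p: (lambda xs: [x * p["factor"] for x in xs]),
    "bias": lambda p: (lambda xs: [x + p["value"] for x in xs]),
    "relu": lambda p: (lambda xs: [max(0.0, x) for x in xs]),
}


def build_model(config):
    """Turn a declarative list of layer specs into a callable model."""
    layers = [
        LAYER_TYPES[spec["type"]](spec.get("params", {}))
        for spec in config
    ]

    def model(xs):
        # Feed-forward: each layer consumes the output of the previous one.
        for layer in layers:
            xs = layer(xs)
        return xs

    return model


# The model is described as data, which could just as well be loaded
# from a text file.
config = [
    {"type": "scale", "params": {"factor": 2.0}},
    {"type": "bias", "params": {"value": -1.0}},
    {"type": "relu"},
]

model = build_model(config)
result = model([0.0, 1.0, 2.0])
print(result)  # [0.0, 1.0, 3.0]
```

In this sketch, changing the architecture only means editing the configuration, but a layer type that is missing from the registry requires changing the library code itself.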

Caffe’s strengths:

- Applications in machine learning, vision, speech and multimedia.
- Good for feed-forward networks.
- Python and MATLAB interfaces
- Flexible
- Good for convolutional neural networks (CNN)

Caffe’s weaknesses:

- Not intended for text, sound or time series data.
- Custom layers require modifying the source code
- The model is defined in protobuf
- Not good for recurrent neural networks (RNN).

When it was first released, Caffe was an excellent tool. Today it has been overtaken by others and has not evolved much.

In April 2017, Facebook released Caffe2. It added features such as RNN support for text translation and speech processing. That said, Caffe2 suffers from a lack of community support and still needs to prove its value. The Caffe team claims that the learning phase can be skipped because it is easy to dive into simple DL models, but the lack of clear documentation is a real bottleneck. Caffe makes it possible to quickly deploy an already written model, but fine tuning turned out to be very demanding.

Pytorch:

Pytorch is also an open source project, and it is also backed by Facebook. Pytorch is based on Torch, which was one of the pioneers among machine learning frameworks. As its name suggests, Pytorch is a Python package providing high-level features. It is mainly a way to replace numpy (a Python library) in order to use GPU power. It also offers a DL platform that enhances flexibility and speed. Torch was first designed for research; its popularity led to the development of a Python version and to its support by Facebook [11].

PyTorch has a unique way of building neural networks: using and replaying a tape recorder. This technique, which makes it possible to change the way a network behaves with zero lag, is called a dynamic graph, as opposed to Tensorflow’s static graphs [3].
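The tape recorder idea can be sketched in plain Python. This is a toy reverse-mode differentiation example for scalars, not PyTorch’s implementation: the `Var` class and the `forward` and `gradients` functions are names invented for this illustration. Each operation is recorded on a tape as it runs, and the tape is then replayed backwards to compute gradients.

```python
class Var:
    """A scalar value that records the operations applied to it."""

    def __init__(self, value, tape):
        self.value = value
        self.grad = 0.0
        self.tape = tape

    def __mul__(self, other):
        out = Var(self.value * other.value, self.tape)

        def backward():
            # d(a*b)/da = b and d(a*b)/db = a
            self.grad += other.value * out.grad
            other.grad += self.value * out.grad

        self.tape.append(backward)
        return out

    def __add__(self, other):
        out = Var(self.value + other.value, self.tape)

        def backward():
            self.grad += out.grad
            other.grad += out.grad

        self.tape.append(backward)
        return out


def forward(x, w):
    y = x * w
    # Ordinary Python control flow decides the graph shape on every run.
    if y.value > 10:
        y = y * w
    return y + x


def gradients(x_value, w_value):
    tape = []  # filled during the forward pass
    x = Var(x_value, tape)
    w = Var(w_value, tape)
    out = forward(x, w)
    out.grad = 1.0
    for backward in reversed(tape):  # replay the tape backwards
        backward()
    return out.value, x.grad, w.grad


print(gradients(2.0, 3.0))  # (8.0, 4.0, 2.0): out = x*w + x
print(gradients(4.0, 3.0))  # (40.0, 10.0, 24.0): out = x*w*w + x
```

The two calls go through different branches of `forward`, so the recorded tape, and therefore the graph, differs from one run to the next. A static graph would need a dedicated conditional operation to express the same thing.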

Pytorch is based on a C/CUDA backend and is released for most platforms. Its installation is quite easy. Pytorch can be extended by defining your own classes. Torch, on which it is based, has a front-end written in Lua, a not very popular programming language, which can be problematic for later integration.

Pytorch’s strengths:

- Flexibility
- Modularity

Pytorch’s weaknesses:

- Not a lot of projects
- Based on Lua
- Not suitable for production

Finally, even though Pytorch is less mature than Tensorflow, it is gaining popularity within the research community, mainly due to its Pythonic API. Its community is not very large yet and it lacks some model resources, but its ease of use is a real plus.

As I am not an expert in any of the three frameworks discussed, I will try them in the following weeks and try to give you objective feedback on my use of them for very basic tasks. The overview might seem a little biased toward Tensorflow, but this is mainly due to its popularity among the community. I hope I’ll be able to update this post later and provide a better description of Caffe and Pytorch. I am also interested in following the development of the new framework that will emerge from the merger of Caffe and Pytorch.

I hope that these lines helped you understand the differences between the three frameworks a little better.