Explore the world within and bring clarity to your data

Start a moving journey to take control of your data management while keeping an eye on its social and ecological impact.

Nestled in the Basque mountains, I maintain a deep relationship with nature and the ocean. My journey as a scientist is shaped by my focus on both technique and biology.

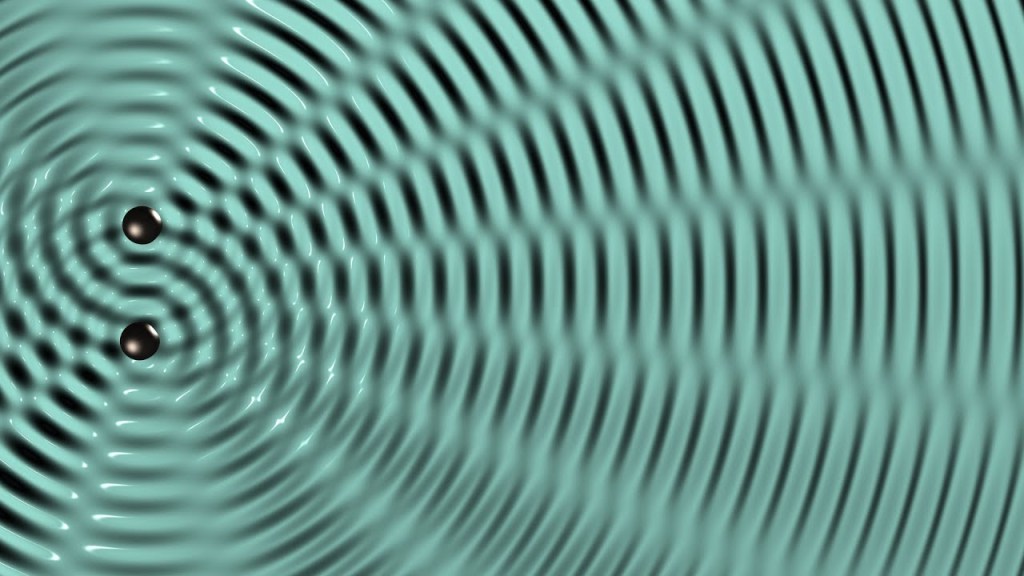

Explore Networks

Everything is interconnected. Your data are a reflection of your organisation.

Enjoy Nature

Nature is an infinite source of inspiration and wisdom. In my journey as a scientist, nature has always held a growing place and helped me align my work with my values at the intersection of technique, biology and philosophy…

-

The Return-to-Office Movement: Beyond the Surface of a Corporate Trend

A critical examination of the motivations, justifications, and implications behind the push to bring employees back to physical offices.

The Great Reversal

Six years…